Creative Computing Hub Oslo (C2HO) presents a series of workshops focused on ml5.js, a beginner-friendly library that lets you use machine learning directly in your web browser. Built on top of TensorFlow.js with no other external dependencies, it provides access to machine learning algorithms and models, including pre-trained models for recognizing images, detecting human poses, generating text, and more.

In this session we will be working with the pre-trained model SketchRNN.

SketchRNN is a machine learning model developed by Google's Magenta team. It's designed to create sketches of objects and animals, and its models are trained on doodles from the millions collected through the Quick, Draw! game. What makes SketchRNN interesting is that it doesn't just generate new images: it draws them stroke by stroke and captures some of the structure of what it's drawing. It uses a type of model called a recurrent neural network, which is particularly good at handling sequences of data. That is exactly what a drawing is: a sequence of strokes over time.
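To make the idea of a drawing as a sequence concrete, here is a plain JavaScript sketch (no ml5 needed) that replays a list of strokes in the `{ dx, dy, pen }` shape ml5's SketchRNN results use. Each entry moves the pen by `dx`, `dy` from its previous position, and its `pen` value (`'down'`, `'up'` or `'end'`) says whether the *next* movement is drawn. The function name `strokesToSegments` and the example strokes are made up for illustration.

```javascript
// Replay a drawing stored as relative pen movements. A movement is drawn as a
// line segment only if the pen was 'down' after the previous step; 'end'
// stops the drawing.
function strokesToSegments(strokes, startX, startY) {
  const segments = [];
  let x = startX;
  let y = startY;
  let previousPen = 'down'; // assume the pen starts on the paper
  for (const s of strokes) {
    if (previousPen === 'end') break; // the drawing is finished
    const nx = x + s.dx;
    const ny = y + s.dy;
    if (previousPen === 'down') {
      segments.push([x, y, nx, ny]); // visible line from old to new position
    }
    x = nx; // the pen moves whether or not a line was drawn
    y = ny;
    previousPen = s.pen;
  }
  return segments;
}

// Hypothetical example: two lines, a move with the pen lifted, a third line.
const example = [
  { dx: 10, dy: 0, pen: 'down' },
  { dx: 0, dy: 10, pen: 'up' },
  { dx: 5, dy: 5, pen: 'down' },  // happens with the pen up: not drawn
  { dx: -10, dy: 0, pen: 'end' },
];
console.log(strokesToSegments(example, 0, 0));
```

For the example strokes this produces three segments: the third movement happens with the pen lifted, so it only repositions the pen.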

Part 1: Load the model and generate drawings

To start, we use a p5.js template that loads the ml5 library. ml5 gives us access to several pre-trained models built on TensorFlow.js, and today we will be using its SketchRNN model. When loading SketchRNN we choose between several different models trained on various drawings, from cats and dogs to more obscure objects such as rabbit-turtles and yoga-bicycles. See the entire list of models here.
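A minimal sketch of the loading step, assuming p5.js and ml5.js 0.x are loaded by index.html and that `ml5.sketchRNN(modelName, callback)` calls `callback` once the model is ready. `modelStatus` and `modelReady` are our own names; the template's status display may be wired up differently.

```javascript
// Load a pre-trained SketchRNN model (assumes p5.js and ml5.js 0.x globals).
let model;                   // the SketchRNN model returned by ml5
let modelStatus = 'loading'; // hypothetical status flag for the page or console

function setup() {
  createCanvas(640, 480);
  background(255);
  // 'cat' is one model name; others in the list include 'face' and 'rabbitturtle'
  model = ml5.sketchRNN('cat', modelReady);
}

// ml5 calls this once the model has loaded
function modelReady() {
  modelStatus = 'ready';
  console.log('SketchRNN model loaded');
}
```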

In the template below, the index.html file also adds a header above our canvas and displays the status of the SketchRNN model. To follow along with the video above, you can start from scratch with this template:

Since we are loading a pre-trained model, we do not need to add any data or train the model ourselves. Instead, we start by creating a generate button that prompts the model to produce pen strokes for us to draw on our canvas. We implement a function, generateDrawing, which is called when the generate button is pressed. Inside it we call the ml5 function generate, which makes SketchRNN start drawing.

Each time we call generate, the model produces the next pen stroke and passes it to a callback function; we use a function called gotResults to handle these results. To get a better understanding of what the model returns, we can print each result to the browser console with console.log.
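A minimal standalone sketch of the generate button and the gotResults callback, assuming p5.js and ml5.js 0.x: `createButton` and `mousePressed` are p5.js DOM functions, the model's `reset()` clears its drawing state, and `generate(callback)` requests the next pen stroke and passes `(error, result)` to the callback. The globals `strokePath`, `x`, `y` and `previousPen` are our own names for the latest stroke, the pen position and the pen state of the previous stroke.

```javascript
// A "generate" button that asks SketchRNN for pen strokes (p5.js + ml5.js 0.x).
let model;                 // the SketchRNN model
let strokePath = null;     // latest stroke from the model: { dx, dy, pen }
let x, y;                  // current pen position on the canvas
let previousPen = 'down';  // pen state of the previous stroke

function setup() {
  createCanvas(640, 480);
  background(255);
  model = ml5.sketchRNN('cat');
  const button = createButton('generate');
  button.mousePressed(generateDrawing); // run generateDrawing on every click
}

function generateDrawing() {
  background(255);   // clear the previous drawing
  x = width / 2;     // start the pen in the middle of the canvas
  y = height / 2;
  previousPen = 'down';
  model.reset();     // forget the state of the previous drawing
  model.generate(gotResults);
}

// ml5 calls this with (error, result) for every generated stroke
function gotResults(error, result) {
  if (error) {
    console.error(error);
    return;
  }
  console.log(result); // inspect dx, dy and pen in the browser console
  strokePath = result;
}
```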

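SketchRNN generates the movement and state of the pen: each result holds how far the pen moves from its previous position (dx and dy) and the pen state, which is one of up, down and end. To draw these strokes on our canvas we populate our draw function. While the pen is down we draw a line for each movement, while it is up we move without drawing, and we keep requesting new strokes until the state changes to end.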
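A minimal sketch of the draw function, assuming the sketch keeps these globals (our own names): `model` (the loaded SketchRNN), `strokePath` (the latest `{ dx, dy, pen }` result, stored by gotResults), `x` and `y` (the pen position) and `previousPen` (the pen state of the previous stroke, `'down'` when a drawing starts). It handles at most one stroke per frame, and draws a movement only if the *previous* stroke left the pen down, which is how SketchRNN's stroke format uses the pen state.

```javascript
// draw() for SketchRNN's strokes; each result is { dx, dy, pen } with pen
// 'down', 'up' or 'end'.
function draw() {
  if (!strokePath) return;       // no new stroke to handle this frame
  if (previousPen === 'down') {
    stroke(0);
    strokeWeight(3);
    line(x, y, x + strokePath.dx, y + strokePath.dy); // pen on the paper: draw
  }
  x += strokePath.dx;            // move the pen whether or not we drew
  y += strokePath.dy;
  previousPen = strokePath.pen;
  const finished = strokePath.pen === 'end';
  strokePath = null;             // this stroke has been handled
  if (!finished) {
    model.generate(gotResults);  // ask for the next stroke
  }
}
```

Requesting the next stroke only after the current one has been drawn keeps the strokes in order and lets you watch the drawing appear gradually.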

Part 2: Generate multiple drawings

By adding a call to background inside our generateDrawing function, we clear the previously generated image and draw a new one every time we press the generate button. If we instead want to fill our canvas with different drawings, we can remove this line of code and change where the model's first stroke starts, for example by picking a random position on the canvas instead of the same starting point each time.
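A possible version of generateDrawing for this step, assuming global variables `model` (the loaded SketchRNN), `x` and `y` (the pen position) and `previousPen` (the pen state of the previous stroke), plus the gotResults callback. It leaves out the `background` call and starts each drawing at a random position; `margin` is a hypothetical value that keeps most drawings on the canvas, and `random(min, max)` is the p5.js random function.

```javascript
// generateDrawing without background(): earlier drawings stay on the canvas.
function generateDrawing() {
  const margin = 80;                  // hypothetical distance from the edges
  x = random(margin, width - margin); // random starting position
  y = random(margin, height - margin);
  previousPen = 'down';
  model.reset();
  model.generate(gotResults);
}
```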

We can also experiment with the look of our drawings by changing the strokeWeight and stroke color, or choose these values randomly using the p5 random function.
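One way to randomize the look of each drawing is a small helper, `pickPenStyle` (our own name), that stores a random color and stroke weight in two globals. Call it at the start of generateDrawing, and in draw use `stroke(penColor)` and `strokeWeight(penWeight)` in place of fixed values. `color` and `random` are p5.js functions; the 1 to 8 pixel range is an arbitrary choice.

```javascript
// A random pen style per drawing (p5.js color and random).
let penColor;
let penWeight = 3;

function pickPenStyle() {
  penColor = color(random(255), random(255), random(255)); // random RGB color
  penWeight = random(1, 8);                                 // 1 to 8 pixels
}
```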

Part 3: Selecting different models

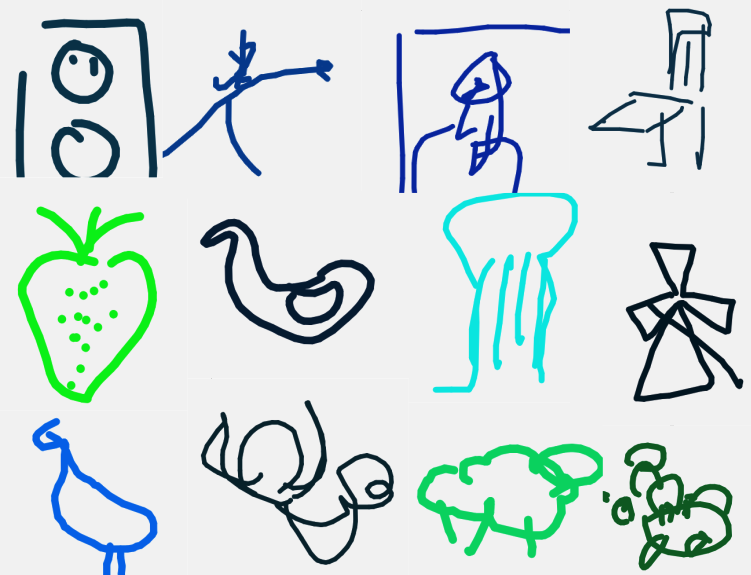

We can choose between many different SketchRNN models through ml5. In this section we create a list of all the different models and choose a new model every time we press the generate button. Again, we use the p5 random function, so we don't know which model is being loaded. This way you can play a game of Pictionary against SketchRNN by trying to guess what the model is attempting to draw. Here are some examples:
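A sketch of a random-model generateDrawing, assuming globals `model`, `x`, `y` and `previousPen` and a gotResults callback that stores each stroke for draw. A new model is loaded on every press, and the drawing starts in the `ml5.sketchRNN` load callback so it only begins once the chosen model is ready. `mysteryModels` and `startNewDrawing` are our own names, and the list is a hand-picked subset; check exact spellings against the full model list.

```javascript
// Load a randomly chosen model each time "generate" is pressed.
const mysteryModels = ['cat', 'face', 'pig', 'bicycle', 'rabbitturtle', 'yogabicycle'];

function generateDrawing() {
  background(255);
  const name = random(mysteryModels); // p5's random(array) picks one element
  console.log('Answer:', name);       // peek in the console to check your guess
  model = ml5.sketchRNN(name, startNewDrawing);
}

// ml5 calls this once the newly chosen model has loaded
function startNewDrawing() {
  x = width / 2;
  y = height / 2;
  previousPen = 'down';
  model.reset();
  model.generate(gotResults);
}
```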

Part 4: Co-creating with SketchRNN

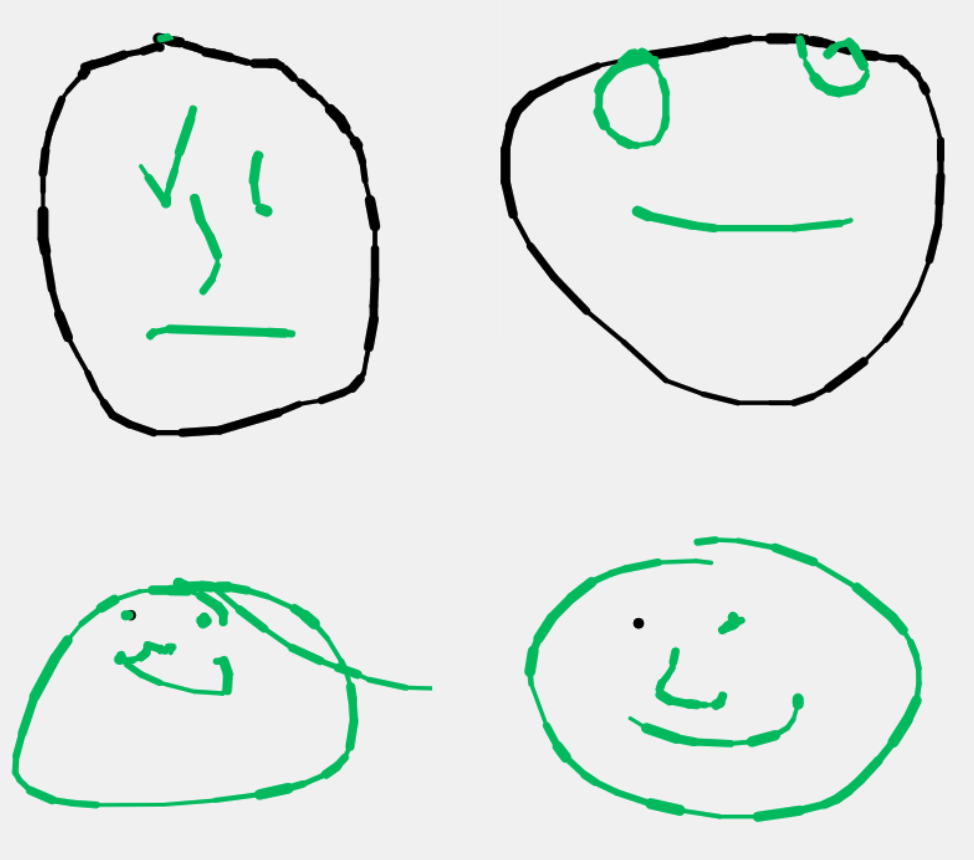

Finally, let's have SketchRNN take input from our own pen strokes. To achieve this we create a separate stroke path for user input inside our draw function. We also create a function, startSketchRNN, which is called when a mouse release is detected inside our canvas. This way the SketchRNN model uses our pen stroke as a starting point and attempts to continue the drawing in a sensible way. If we load the face model, for example, SketchRNN will try to create a face based on whatever we draw.
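A sketch of the co-creation step, assuming ml5.js 0.x, where `model.generate(seed, callback)` accepts a seed array of `{ dx, dy, pen }` strokes, and assuming globals `model`, `x`, `y`, `previousPen` and `strokePath` plus a gotResults callback that stores each result in `strokePath`. It replaces setup and draw: while the mouse is held down on the canvas, draw records the user's own stroke path as points, and on release startSketchRNN converts those points into relative strokes and hands them to the model as a seed. `userPoints`, `userDrawing`, `startUserStroke` and `pointsToSeed` are our own names.

```javascript
// Let SketchRNN continue a stroke drawn with the mouse (p5.js + ml5.js 0.x).
let userPoints = [];     // mouse positions of the user's stroke
let userDrawing = false; // true while the mouse button is held on the canvas

function setup() {
  const canvas = createCanvas(640, 480);
  background(255);
  model = ml5.sketchRNN('face');        // SketchRNN will try to finish a face
  canvas.mousePressed(startUserStroke); // presses on the canvas only
  canvas.mouseReleased(startSketchRNN); // hand over when the stroke is done
}

function startUserStroke() {
  userDrawing = true;
  userPoints = [{ x: mouseX, y: mouseY }];
}

// Convert absolute mouse positions into relative { dx, dy, pen } strokes
function pointsToSeed(points) {
  const seed = [];
  for (let i = 1; i < points.length; i++) {
    seed.push({
      dx: points[i].x - points[i - 1].x,
      dy: points[i].y - points[i - 1].y,
      pen: 'down',
    });
  }
  return seed;
}

function startSketchRNN() {
  userDrawing = false;
  if (userPoints.length < 2) return; // a click without movement: no seed
  const last = userPoints[userPoints.length - 1];
  x = last.x; // the model continues from the end of our stroke
  y = last.y;
  previousPen = 'down';
  model.reset();
  model.generate(pointsToSeed(userPoints), gotResults);
}

function draw() {
  stroke(0);
  strokeWeight(3);
  // Separate stroke path for the user's own input
  if (userDrawing) {
    line(pmouseX, pmouseY, mouseX, mouseY);
    const prev = userPoints[userPoints.length - 1];
    if (mouseX !== prev.x || mouseY !== prev.y) {
      userPoints.push({ x: mouseX, y: mouseY }); // skip frames without movement
    }
  }
  // The model's strokes: draw a movement only if the previous pen was down
  if (strokePath) {
    if (previousPen === 'down') {
      line(x, y, x + strokePath.dx, y + strokePath.dy);
    }
    x += strokePath.dx;
    y += strokePath.dy;
    previousPen = strokePath.pen;
    const finished = strokePath.pen === 'end';
    strokePath = null;
    if (!finished) model.generate(gotResults);
  }
}
```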

The model's results vary from prompt to prompt, so we add a button that clears the current drawing and resets the model for a new prompt. We also add a dropdown menu so that we can select a different model depending on what we want to draw. Using the p5 function changed, we make sure the model is reloaded whenever we select a new model name from the dropdown menu.
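A sketch of the clear button and model dropdown, assuming globals `model`, `strokePath`, `previousPen` and `userPoints` (an array holding the user's stroke points). Call `createControls()` from setup. `createButton`, `createSelect`, `option`, `value` and `changed` are p5.js DOM functions; `modelNames` is a hand-picked subset of model names, with `'face'` first so the dropdown's initial value matches a sketch that starts with the face model.

```javascript
// A clear button and a model dropdown (p5.js DOM + ml5.js 0.x).
const modelNames = ['face', 'cat', 'pig', 'rabbitturtle', 'yogabicycle'];
let modelSelect;

function createControls() {
  createButton('clear').mousePressed(clearDrawing);
  modelSelect = createSelect();
  for (const name of modelNames) {
    modelSelect.option(name); // one <option> per model name
  }
  modelSelect.changed(changeModel); // runs whenever a new option is picked
}

// Wipe the canvas and forget both the user's and the model's strokes
function clearDrawing() {
  background(255);
  strokePath = null;
  previousPen = 'down';
  userPoints = [];
  model.reset();
}

function changeModel() {
  clearDrawing();
  model = ml5.sketchRNN(modelSelect.value()); // load the newly selected model
}
```

Because generate works asynchronously, a stroke requested just before pressing clear may still arrive and continue on the empty canvas; clearing after a drawing has finished avoids this.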

Going Further

Explore other RNN implementations and train your own:

Resources:

This workshop is based on this tutorial from the ml5.js resources.